Picture this: you’re tasked with analyzing terabytes of data, extracting valuable insights, and making data-driven decisions. Seems daunting, right? But what if you had a tool that could effortlessly handle this massive workload, allowing you to unlock hidden patterns and trends within your data? Enter PySpark, the Python API for Apache Spark, a powerful engine for large-scale data processing and analytics.

This guide, Essential PySpark for Scalable Data Analytics, is your starting point for learning PySpark. Whether you’re a seasoned data scientist seeking to optimize your workflows or a curious beginner venturing into the world of big data, it introduces the core concepts and operations you need to begin working with scalable data analytics.

Understanding the Power of PySpark

Why PySpark?

In the age of big data, traditional tools often struggle to cope with the sheer volume and complexity of information. PySpark emerges as a game-changer, seamlessly combining the ease of use of Python with the robust distributed processing capabilities of Apache Spark. This dynamic duo empowers you to:

- Process Terabytes of Data: PySpark’s distributed architecture splits a dataset into partitions and processes them in parallel across multiple machines, so you can handle data that does not fit on a single machine by adding nodes to the cluster.

- Perform Complex Analytical Tasks: From data cleansing and transformation to machine learning (MLlib) and near-real-time stream processing (Structured Streaming), PySpark offers a comprehensive set of tools to tackle a wide range of data-driven challenges.

- Leverage the Familiarity of Python: For those well-versed in Python, the transition to PySpark is remarkably smooth. You can leverage your existing Python skills and libraries to create powerful data analytics pipelines.

Key Concepts: RDDs and DataFrames

At the heart of PySpark lie two fundamental concepts: Resilient Distributed Datasets (RDDs) and DataFrames. Understanding these building blocks is crucial to unlocking the full potential of PySpark.

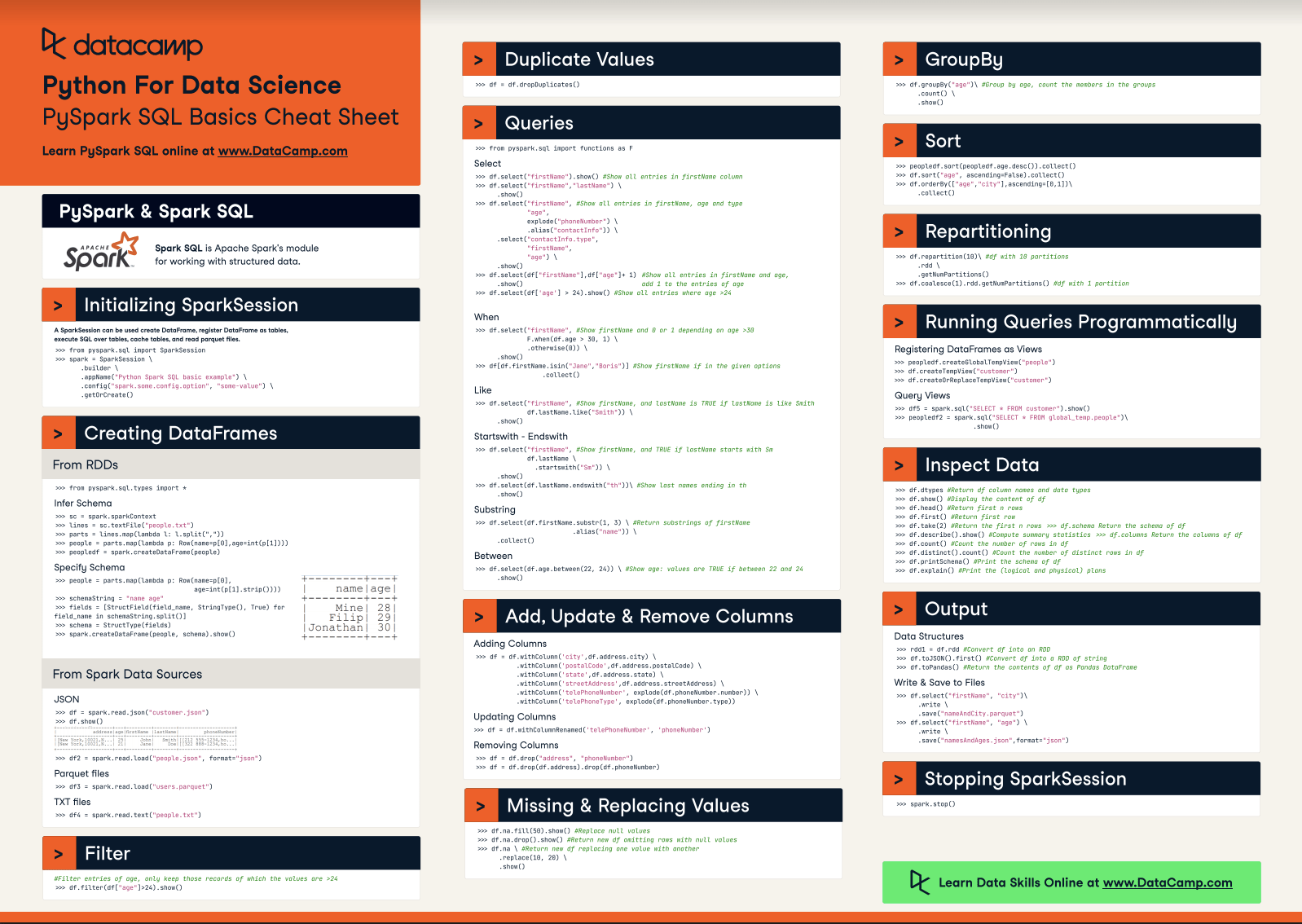

Image: www.datacamp.com

RDDs: The Foundation of Distributed Computing

An RDD is Spark’s foundational, low-level data structure: an immutable, distributed collection of records. Think of an RDD as a set of partitions, each holding a chunk of your data, spread across the nodes in your cluster. PySpark runs operations on these partitions in parallel, and if a partition is lost, Spark can recompute it from the lineage of transformations that produced it, which is the “resilient” part of the name.
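To make the partition idea concrete, here is a minimal plain-Python sketch. It is a model, not PySpark itself: the helpers split_into_partitions and sum_partition are hypothetical names, and a thread pool stands in for the cluster. In PySpark the rough counterparts would be sc.parallelize(data, numSlices=3) and rdd.mapPartitions(...), and Spark’s own rule for cutting data into partitions may differ from the one used here.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_partitions(data, num_partitions):
    """Split a list into num_partitions contiguous, roughly equal chunks."""
    size, extra = divmod(len(data), num_partitions)
    partitions, start = [], 0
    for i in range(num_partitions):
        # The first `extra` partitions get one additional element.
        end = start + size + (1 if i < extra else 0)
        partitions.append(data[start:end])
        start = end
    return partitions


def sum_partition(partition):
    # Work done independently on one partition, like one task on one executor.
    return sum(partition)


data = list(range(1, 11))
partitions = split_into_partitions(data, 3)

# Each partition is processed in parallel; threads stand in for cluster nodes.
with ThreadPoolExecutor(max_workers=3) as pool:
    partial_sums = list(pool.map(sum_partition, partitions))

# The per-partition results are combined into the final answer.
total = sum(partial_sums)

print(partitions)    # [[1, 2, 3, 4], [5, 6, 7], [8, 9, 10]]
print(partial_sums)  # [10, 18, 27]
print(total)         # 55
```

The point of the sketch is the shape of the computation: independent work per partition, followed by a small combine step.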

DataFrames: Structured Data for Enhanced Analysis

While RDDs provide flexibility, DataFrames introduce structure and schema to your data, making it easier to work with complex datasets. DataFrames are essentially tables with rows and columns, resembling the familiar structure of relational databases. This organization simplifies tasks like querying, filtering, and aggregations, facilitating data analysis.
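As a rough illustration of what a schema buys you, the plain-Python sketch below models a tiny table with named, typed columns and runs a filter followed by a grouped aggregation. The Purchase schema and its data are made up for this example. In PySpark, assuming a DataFrame df with the same columns, the equivalent query could be written as df.filter(df.amount > 20).groupBy("country").sum("amount").

```python
from collections import defaultdict
from typing import NamedTuple


class Purchase(NamedTuple):
    # The "schema": every row has the same named, typed columns.
    customer: str
    country: str
    amount: float


rows = [
    Purchase("alice", "DE", 30.0),
    Purchase("bob", "DE", 15.0),
    Purchase("carol", "FR", 50.0),
    Purchase("dave", "FR", 25.0),
]

# Filter: keep only purchases above 20 (refers to the column by name).
large = [row for row in rows if row.amount > 20]

# Group by country and sum the amount column.
totals = defaultdict(float)
for row in large:
    totals[row.country] += row.amount
result = dict(totals)

print(result)  # {'DE': 30.0, 'FR': 75.0}
```

Because the columns have names and types, the query can be expressed in terms of the data’s meaning rather than positions in a tuple, which is what makes DataFrame code easier to read than the equivalent RDD code.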

Essential PySpark Operations for Data Analysis

Data Transformation: Shaping Your Data

PySpark provides a rich set of transformation functions to mold your data into the desired format for analysis. Each transformation returns a new RDD or DataFrame and leaves the original unchanged. Transformations are also lazy: Spark only records them and runs nothing until an action asks for a result.

Common Transformations:

- map(): Applies a function to each element of an RDD, creating a new RDD where each element is transformed according to the specified function.

- filter(): Creates a new RDD containing only elements that meet a specific condition specified by a filter function.

- flatMap(): Similar to map, but it can generate multiple elements for each input element, allowing for flattening and transformation.

- reduceByKey(): Works on an RDD of (key, value) pairs and merges the values that share a key using a user-specified function, which should be associative and commutative.

- join(): Joins two RDDs of (key, value) pairs on their keys, producing a (key, (left value, right value)) pair for every match.
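The semantics of the five transformations above can be sketched in plain Python. This is a model that runs without Spark, not the PySpark implementation: the rdd_* helper names are hypothetical, ordinary lists stand in for RDDs, and results are sorted only to make the output deterministic (Spark does not guarantee an order here). With a real SparkContext the same pipeline would use the RDD methods named in the comments.

```python
from collections import defaultdict
from operator import add


def rdd_map(f, data):
    # rdd.map(f): exactly one output element per input element.
    return [f(x) for x in data]


def rdd_filter(pred, data):
    # rdd.filter(pred): keep only the elements where pred(x) is true.
    return [x for x in data if pred(x)]


def rdd_flat_map(f, data):
    # rdd.flatMap(f): f returns an iterable; the results are flattened.
    return [y for x in data for y in f(x)]


def rdd_reduce_by_key(f, pairs):
    # rdd.reduceByKey(f): merge the values of each key with f.
    merged = {}
    for key, value in pairs:
        merged[key] = f(merged[key], value) if key in merged else value
    return sorted(merged.items())


def rdd_join(left, right):
    # left.join(right): inner join on the key of (key, value) pairs.
    right_values = defaultdict(list)
    for key, value in right:
        right_values[key].append(value)
    return [(k, (v, w)) for k, v in left for w in right_values.get(k, [])]


lines = ["spark makes big data simple", "big data needs spark"]

words = rdd_flat_map(str.split, lines)                # one line -> many words
long_words = rdd_filter(lambda w: len(w) > 3, words)  # drops "big"
pairs = rdd_map(lambda w: (w, 1), long_words)         # word -> (word, 1)
counts = rdd_reduce_by_key(add, pairs)                # sum the 1s per word

labels = [("spark", "engine"), ("data", "noun")]
joined = rdd_join(counts, labels)

print(counts)
# [('data', 2), ('makes', 1), ('needs', 1), ('simple', 1), ('spark', 2)]
print(joined)
# [('data', (2, 'noun')), ('spark', (2, 'engine'))]
```

Note how flatMap differs from map: two input lines become nine words, whereas map always produces one output per input.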

Data Actions: Extracting Insights

Data actions are crucial for interacting with your transformed data and extracting valuable insights. These actions trigger the execution of your PySpark computations, producing concrete results.

Common Actions:

- collect(): Returns all elements of an RDD to the driver program. Use it for inspecting small datasets or small final results only, because everything it returns must fit in the driver’s memory.

- count(): Returns the total number of elements in an RDD.

- take(n): Returns the first n elements from an RDD.

- reduce(): Aggregates all elements in an RDD into a single value using a user-defined binary function, which should be associative and commutative so that partitions can be combined in any order.

- saveAsTextFile(): Writes the contents of an RDD as text to a directory, with one output file per partition, allowing you to store and share your processed data.
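The idea that actions trigger execution can be modeled in a few lines of plain Python. The LazyDataset class below is a hypothetical toy, not a PySpark class: its transformations only record a step, and its actions (named after the RDD actions listed above) run the recorded steps. The calls list exists purely to show when the mapped function actually executes.

```python
import functools
from itertools import islice
from operator import add


class LazyDataset:
    """Toy stand-in for an RDD: transformations are recorded, actions run them."""

    def __init__(self, source, steps=()):
        self._source = source
        self._steps = steps  # each step turns one iterator into another

    # Transformations: return a new dataset, execute nothing.
    def map(self, f):
        step = lambda items: (f(x) for x in items)
        return LazyDataset(self._source, self._steps + (step,))

    def filter(self, pred):
        step = lambda items: (x for x in items if pred(x))
        return LazyDataset(self._source, self._steps + (step,))

    def _run(self):
        items = iter(self._source)
        for step in self._steps:
            items = step(items)
        return items

    # Actions: run the recorded steps and return a concrete result.
    def collect(self):
        return list(self._run())

    def count(self):
        return sum(1 for _ in self._run())

    def take(self, n):
        return list(islice(self._run(), n))

    def reduce(self, f):
        return functools.reduce(f, self._run())


calls = []  # records every time the mapped function really runs


def square(x):
    calls.append(x)
    return x * x


odd_squares = LazyDataset(range(1, 6)).map(square).filter(lambda x: x % 2 == 1)
executed_before_action = len(calls)  # still 0: nothing has run yet

collected = odd_squares.collect()    # [1, 9, 25]
n = odd_squares.count()              # 3
first_two = odd_squares.take(2)      # [1, 9]
total = odd_squares.reduce(add)      # 35

print(executed_before_action, collected, n, first_two, total)
```

In this toy, every action re-runs the whole pipeline from the source. Spark behaves similarly unless a dataset is explicitly cached or persisted, which is worth keeping in mind when several actions reuse the same intermediate result.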

Harnessing PySpark for Real-World Applications

Customer Segmentation and Analysis

Imagine you have a massive database of customer purchase history. PySpark can help you segment customers based on their purchasing behavior, identify customer churn patterns, and tailor marketing campaigns to specific demographics.

Fraud Detection and Risk Assessment

Detecting fraudulent transactions in real time is crucial for financial institutions. PySpark can be employed to analyze transaction data, identify suspicious patterns, and alert authorities to potential fraud, reducing financial losses and enhancing security.

Sentiment Analysis and Social Media Monitoring

PySpark can process mountains of social media data, extracting sentiments, trends, and customer feedback. This information is invaluable for brands to gauge public opinion, understand customer preferences, and adapt their marketing strategies.

Recommender Systems

Building intelligent recommender systems that suggest products or content tailored to individual preferences relies on extensive data analysis. PySpark’s powerful capabilities enable the processing of user interactions, product data, and other relevant information to develop accurate and personalized recommendation engines.

Unlocking the Power of PySpark: Your Next Steps

This guide has only scratched the surface of PySpark’s vast potential. Armed with this foundational knowledge, you’re ready to dive deeper into the world of scalable data analytics.

Explore the rich online resources, follow tutorials, and get hands-on experience with PySpark. Join communities, engage in discussions, and learn from experts in the field. As you progress, you’ll discover how PySpark not only empowers you to deal with large-scale data effectively but also unlocks insights that can drive significant business value.

Embrace the power of PySpark, and unlock a world of possibilities in the realm of big data analytics!